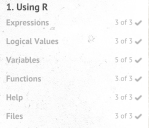

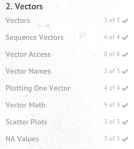

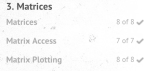

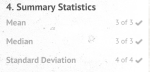

After assembling the data and loading it into R, I used ggplot2 to answer the following questions:

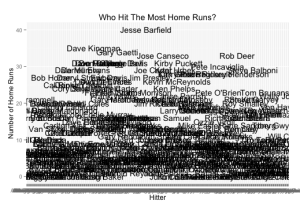

1. Who had the highest number of home runs (HR)? Jesse Barfield.

At first I got a scatter plot with the individual names on the y-axis, which would work well if there were few enough names. I then made a more aesthetically pleasing version (shown second) with the name of each hitter drawn in place of the point. Ideally I would love to add the label to the point on the first graph, but with my beginner skills I found that using the two visualizations together works best in this case (because there was a clear winner).
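One way to get the label onto the point itself is to add a `geom_text()` layer that only receives the row for the leader. This is a minimal sketch, not the code behind the plots here: the data frame `hitters`, its column names (`name`, `hits`, `hmrun`), and all the values are made up for illustration.

```r
library(ggplot2)

# Toy data: names and values are invented for illustration only.
hitters <- data.frame(
  name  = c("Player A", "Player B", "Player C", "Player D"),
  hits  = c(120, 150, 98, 170),
  hmrun = c(12, 31, 8, 25)
)

# Keep only the row(s) with the maximum home-run count.
leader <- hitters[hitters$hmrun == max(hitters$hmrun), ]

p <- ggplot(hitters, aes(x = hits, y = hmrun)) +
  geom_point() +
  # Label only the leader; vjust nudges the text above its point.
  geom_text(data = leader, aes(label = name), vjust = -1) +
  # Leave some headroom so the label is not clipped at the top.
  expand_limits(y = max(hitters$hmrun) * 1.1)

print(p)
```

Because the label layer gets its own small data frame, every other point stays unlabeled and the plot remains readable even with many hitters. If there were a tie, `leader` would hold several rows and each would be labeled.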

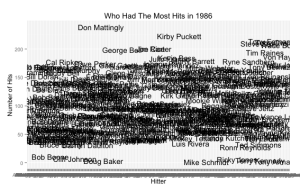

2. Who had the maximum number of hits in 1986? Don Mattingly.

I used the same (not ideal) technique that I used to answer the first question. Again, I understand that I was only able to do this because there was a clear winner.

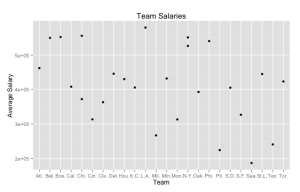

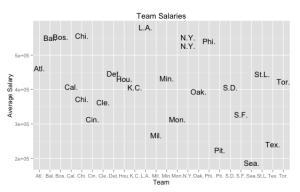

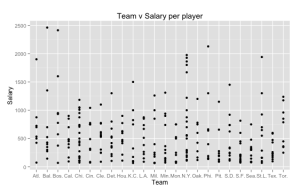

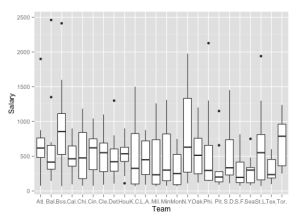

3. Which is the second most expensive team in the league?

The best way to answer this question would be to sum the salaries for each team, but I couldn’t find an effective way to do this with my skills. Instead, I used the team data to plot the average salary for each team (shown in the first two visualizations). I then used the hitter data to plot salary versus team, which gave the third visualization, and created a box and whisker plot per team. I can’t say definitively which team spends the second most in total (on all of its players). However, I can answer many other questions. For example, Chicago has the second highest average salary (shown by the team visualizations, which come first). What was weird to me is that this did not coincide with the individual box plot visualizations created from the hitter data. From those I can conclude that Baltimore spends the most on an individual player and Boston the second most; New York’s middle 50% (interquartile range) extends the highest but doesn’t start the highest; and the average bar shows that Boston has the highest average salary and Toronto the second. I attempted to get a stacked bar graph with the sums per team, but I feel I would be more successful if I computed those sums from the data first and then visualized them, instead of the other way around.
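The "sum first, then visualize" idea could look something like the sketch below, which uses base R's `aggregate()` to total the salaries per team before plotting. The data frame, the column names `team` and `salary`, and the numbers are all hypothetical stand-ins for the hitter data.

```r
library(ggplot2)

# Toy data: team names and salaries are invented for illustration.
hitters <- data.frame(
  team   = c("Team X", "Team X", "Team Y", "Team Y", "Team Z"),
  salary = c(500, 700, 900, 100, 1100)
)

# Total salary per team. With the formula interface, rows whose
# salary is NA are dropped before summing.
team_totals <- aggregate(salary ~ team, data = hitters, FUN = sum)

# Sort from most to least expensive, then read off the second row.
team_totals <- team_totals[order(-team_totals$salary), ]
second_most_expensive <- as.character(team_totals$team[2])

# Bar chart of the totals, with bars ordered by total salary.
p <- ggplot(team_totals, aes(x = reorder(team, -salary), y = salary)) +
  geom_col() +
  labs(x = "Team", y = "Total salary")

print(p)
```

One caveat with this approach: players with a missing salary are silently left out of the sums, so a team with many missing values would look cheaper than it is.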

Specific Goal: Are players paid according to their performance?

To answer this question I created many different visualizations and will describe them one by one. I did manipulate the data by creating new variables that represent the percentage of hits, runs, home runs, etc., dividing each count (for example, the number of hits) by the number of at bats. Another variable I created was the career percentage, where I divided the current number of hits, runs, etc. by the player’s yearly average of hits, runs, etc. (their career hits, runs, etc. divided by the number of years they have played in the majors). This variable will be about 1 if they are getting about the same amount as they have averaged over their career, greater than 1 if they are doing better in the current year, and less than 1 if they are doing worse in the current year.
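As a rough sketch of how these derived variables can be computed for hits (the same pattern applies to runs, home runs, and so on), assuming hypothetical column names `at_bat`, `hits`, `c_hits` (career hits), and `years`, with made-up values:

```r
# Toy data: column names and values are assumptions for illustration.
hitters <- data.frame(
  name   = c("Player A", "Player B", "Player C"),
  at_bat = c(400, 500, 300),
  hits   = c(100, 150, 60),
  c_hits = c(450, 300, 60),  # career hits, including the current year
  years  = c(5, 2, 1)        # years played in the majors
)

# Hits percentage: current-year hits divided by current-year at bats.
hitters$hit_pct <- hitters$hits / hitters$at_bat

# Yearly average over the career: career hits divided by years played.
hitters$avg_hits_per_year <- hitters$c_hits / hitters$years

# Career percentage: current-year hits relative to the yearly average.
hitters$career_ratio_hits <- hitters$hits / hitters$avg_hits_per_year

print(hitters)
```

In this toy example Player A comes out slightly above 1 (100 hits against a yearly average of 90), while Player C, in a first season, is exactly 1 because the career total is the same as the current-year total.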

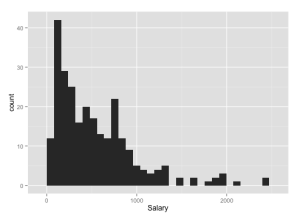

^ First of all I plotted a histogram of the salaries to see their distribution, which is as expected, with most of the salaries at the lower end of the range.

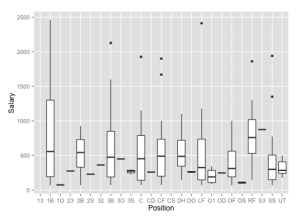

^ Plotted a box and whisker plot of salaries per position to see if there was any particular position that was clearly paid more than the others. However, it looks as though players of different positions are on average paid the same. (If I were continuing the research on this data, I would investigate the positions of only the players making over, say, 1,000 to see if there is a pattern among the players who make the most.)

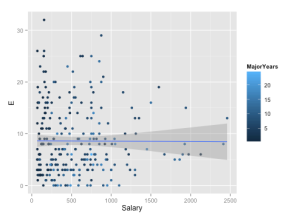

^ Plotted salary against errors made, using the number of years they’ve played in the majors for the color. The color shows that those with higher salaries have played in the majors for a little while. The trend line shows that errors didn’t seem to affect their salary.
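A plot of this kind can be sketched in ggplot2 as a scatter plot with a continuous color scale and a `geom_smooth()` layer. The sketch below assumes a straight-line (linear model) trend, which may not match the trend line used in the plot above; the column names and values are invented for illustration.

```r
library(ggplot2)

# Toy data: values are invented for illustration only.
hitters <- data.frame(
  errors = c(2, 5, 9, 12, 15, 20),
  salary = c(300, 750, 200, 900, 450, 600),
  years  = c(1, 8, 2, 12, 4, 6)
)

p <- ggplot(hitters, aes(x = errors, y = salary)) +
  # Color each point by years in the majors.
  geom_point(aes(colour = years)) +
  # Assumed linear trend; se = FALSE hides the confidence band.
  geom_smooth(method = "lm", formula = y ~ x, se = FALSE)

print(p)

# The same straight line fitted directly, to read off its slope.
fit <- lm(salary ~ errors, data = hitters)
slope <- coef(fit)[["errors"]]
```

A slope close to zero relative to the spread of salaries is what "the trend line is flat" means numerically. Swapping `errors` for another column (assists, hits percentage, career hits) gives the other plots in this section.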

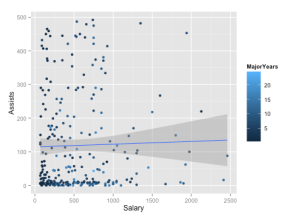

^ Plotting salary against the number of assists surprisingly shows that assists don’t seem to affect their salary either.

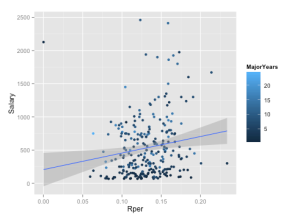

^ Plotting run percentage (runs/at bats) against salary. The trend line shows that as the run percentage increases, so does the salary. This would support the idea that players get paid according to their performance. The outliers at the top all seem to be a darker blue and thus are newer players, so their pay makes sense. If they are lighter, then they are either not paid according to their hitting/run skills or they are paid disproportionately to their skills.

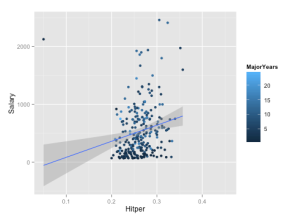

^ Plotting hits percentage (hits/at bats) against salary. The trend line also shows that as the hits percentage increases, so does the salary. This would support the idea that players get paid according to their performance. The outliers at the top all seem to be a darker blue and thus are newer players, so their pay makes sense. If they are lighter, then they are either not paid according to their hitting skills or they are paid disproportionately to their skills.

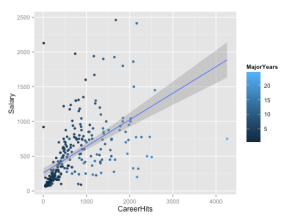

^ Plotting career hits against salary. The trend line shows that salary increases with career hits. This would support the idea that players get paid according to their performance. Another thing seen in this visualization is that players who’ve been playing longer with a very small number of career hits do not seem to get paid much, with some exceptions. One outlier is the point on the bottom right, with a high number of career hits and a low salary; this player could have gotten most of those hits earlier in their career and is not playing as well now, so they don’t get paid as much. The other outliers, on the top left, are players with high salaries and a low number of career hits. These players are probably not paid for their hitting (probably pitchers), or it’s their first year so their career hits equal their current number of hits (all darker blue), or they are not paid according to their performance.

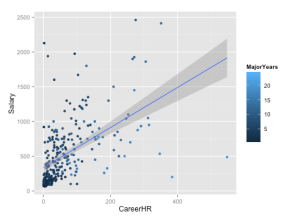

^ Plotting career home runs against salary. The trend line shows an increase in salary as career home runs increase. This would support the idea that players get paid according to their performance. The players with more career home runs who fall under the trend line are all players who haven’t played in the majors for very long, which makes sense: new players who are hitting well in their first few seasons don’t have high-salary contracts yet. This still supports the idea that players get paid according to their performance, when you consider that they are getting paid based on past seasons’ performance. The outliers are the same as in the previous visualization, but with home runs instead of hits.

Overall, the data still calls for more investigation; however, at this point I will conclude that players get paid according to their performance. (Players that are shown to have higher performance but lower salaries are assumed to have lower performance in a different category. Example: a newer player to the league with amazing, unexpected stats compared to when they made the salary contract, or a player that has great stats but just had an injury and was back from the DL. And players that are shown to have lower performance but higher salaries are assumed to have higher performance in a different category. Example: an amazing pitcher that is not the best hitter, so their hitting stats will be very low, but they will be making a lot of money.)